Introduction

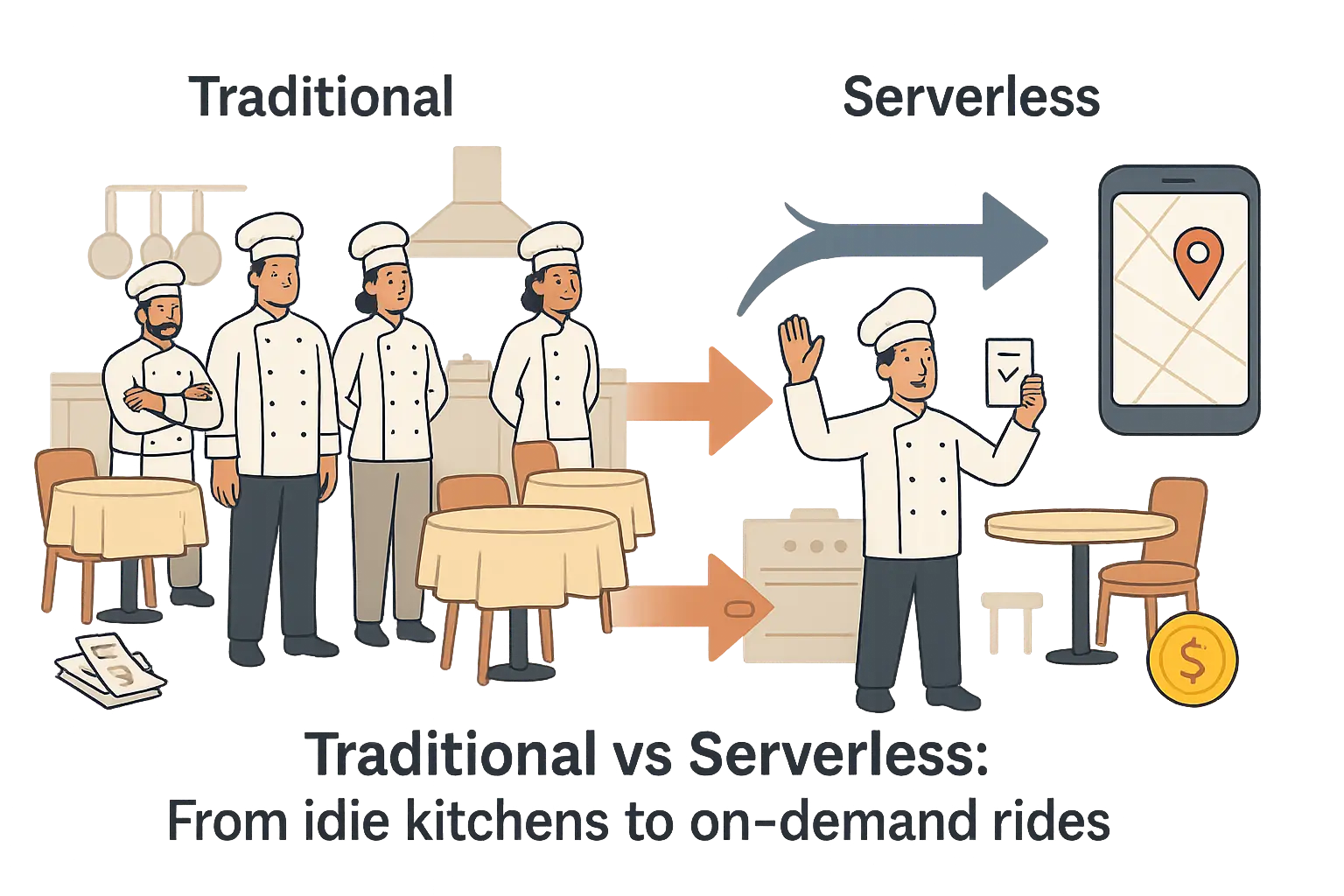

Imagine you run a restaurant. In the traditional setup, you hire full-time chefs, pay rent, buy groceries, and keep the kitchen open all day—even if customers don’t show up. It’s costly, inefficient, and unpredictable.

Now picture another model: your chefs appear only when a customer places an order. They cook, serve, and vanish. You pay only for the meal prepared. No idle costs, no wasted ingredients.

That’s Serverless Computing in the cloud world.

The Problem with Traditional Infrastructure

Before serverless, organizations had to:

Provision servers manually – setting up virtual machines, load balancers, and scaling groups.

Pay for idle capacity – servers remain online even when there’s little or no workload.

Manage maintenance – patching, monitoring, and updating OS or runtime.

Predict traffic – underestimating causes downtime; overestimating wastes cost.

This model made it difficult for startups and enterprises to innovate quickly.

The Serverless Shift

Serverless changes this by offering a fully managed, event-driven approach where you:

Write code (functions) instead of managing servers.

Let AWS handle provisioning, scaling, and high availability.

Pay only for the compute time when your code actually runs.

No servers to launch, patch, or monitor—just focus on building features.

As the illustration shows, the chef and the driver both appear only when needed. Serverless is like using a ride-hailing app instead of owning a car: you don’t worry about fuel, maintenance, or parking. You request a ride when needed, pay for the distance, and move on. Similarly, serverless lets you run code only when required, without owning or managing infrastructure.

“Serverless doesn’t mean there are no servers — it means you don’t manage them.”

Many learners assume that ‘serverless’ means servers have disappeared. In reality, servers still exist — but AWS takes care of provisioning, scaling, maintenance, and fault tolerance behind the scenes.

What is Serverless Computing?

Serverless computing is a cloud-native execution model where the cloud provider (like AWS) runs your code, manages infrastructure, and automatically scales resources when needed.

In this model:

You focus only on writing your business logic (functions).

AWS automatically handles capacity, patching, and scaling.

You pay only for the actual execution time, not for idle resources.

Serverless allows you to move from managing servers to deploying code that reacts to events.

Example: How Serverless Works

Let’s understand a simple serverless workflow through clear, sequential steps:

Step 1: Event Source

A user uploads an image to an Amazon S3 bucket, initiating the workflow.

Step 2: Automatic Trigger

The upload event automatically invokes an AWS Lambda function configured to respond to this event.

Step 3: Function Execution

The Lambda function starts running within moments of the upload. It resizes, compresses, or otherwise processes the image as defined in the function code.

Step 4: Output Generation

The processed image is stored in another S3 bucket, for example, processed-images (S3 bucket names must be lowercase).

Step 5: Logging and Monitoring

Amazon CloudWatch records the logs and performance metrics for this entire process, allowing you to monitor and troubleshoot if necessary.

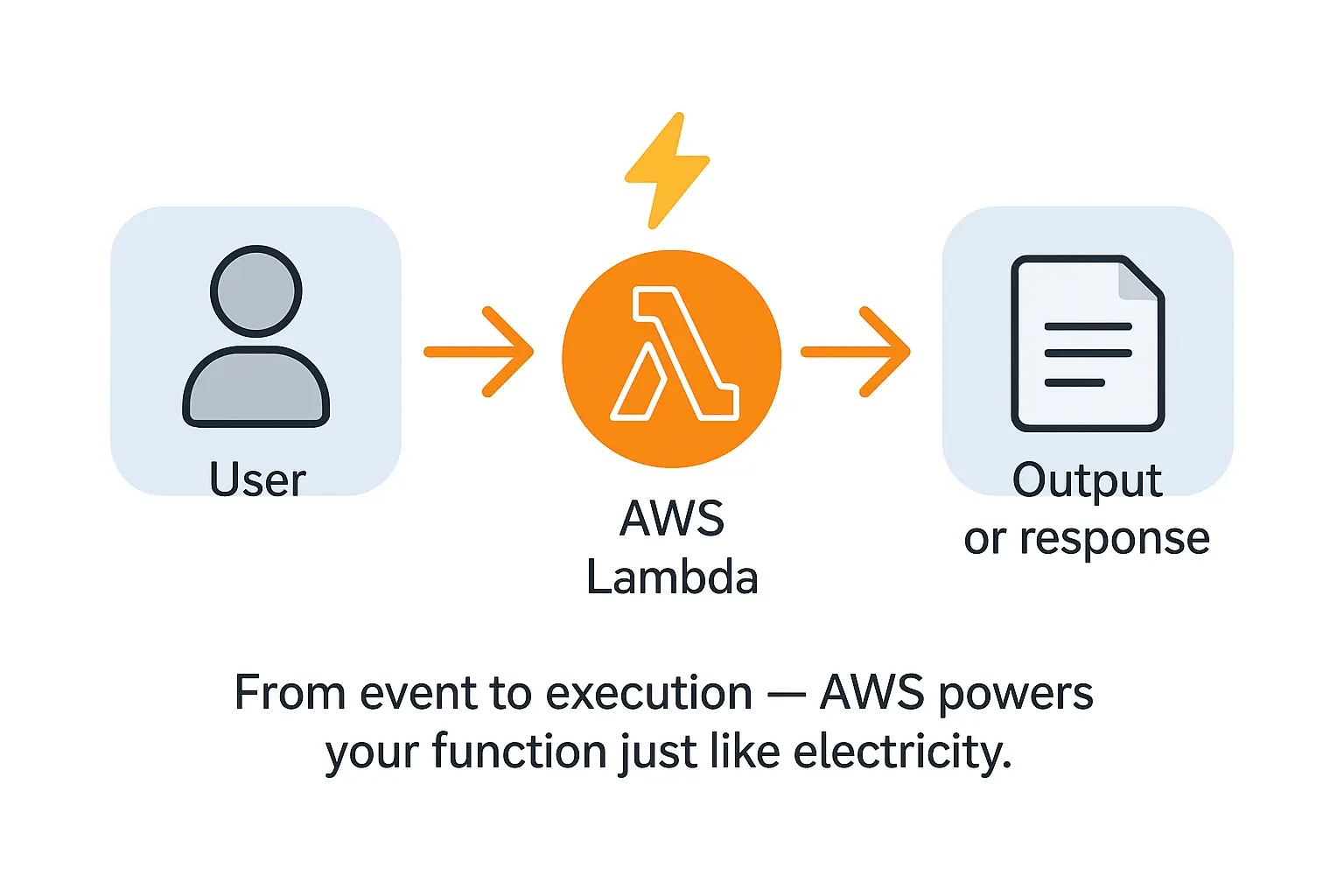
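The five steps above can be sketched as a single Lambda handler in Python. This is a minimal sketch under stated assumptions, not a definitive implementation: the destination bucket name `processed-images`, the `process_image` placeholder, and the optional `s3_client` parameter (added so the handler can be exercised without AWS access) are all hypothetical.

```python
import urllib.parse

# Hypothetical destination bucket; replace with your own.
DEST_BUCKET = "processed-images"


def extract_objects(event):
    """Return (bucket, key) pairs from an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def process_image(data):
    """Placeholder for resizing or compression; returns the bytes unchanged."""
    return data


def handler(event, context, s3_client=None):
    # Steps 1-2: the S3 upload event is delivered to this function.
    if s3_client is None:
        import boto3  # AWS SDK for Python
        s3_client = boto3.client("s3")

    processed = []
    for bucket, key in extract_objects(event):
        # Step 3: read and process the uploaded object.
        original = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
        # Step 4: store the result in the destination bucket.
        s3_client.put_object(
            Bucket=DEST_BUCKET, Key=key, Body=process_image(original)
        )
        # Step 5: anything printed here is captured in CloudWatch Logs.
        print(f"processed s3://{bucket}/{key}")
        processed.append(key)
    return {"processed": processed}
```

In a real deployment, `process_image` would call an image library, and the function's execution role would need read access to the source bucket and write access to the destination bucket.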

Serverless computing is like using electricity at home:

You don’t own or maintain a generator.

You simply flip the switch when you need power.

You’re charged only for what you consume.

AWS manages the power plant (infrastructure), while you just use the light (code execution). It’s always available, instantly scalable, and maintenance-free.

Advantages of Serverless

Would you rather pay rent for an idle office space or pay only for the hours your team actually works there? That’s the fundamental advantage of serverless computing — you stop paying for unused resources and start paying for actual usage.

Serverless computing offers benefits that go beyond cost savings. It transforms how teams build, deploy, and scale applications.

Key Advantages

1. No Server Administration

There’s no need to manage servers, operating systems, or runtime environments. AWS handles provisioning, updates, and security patches automatically.

Focus on writing and deploying code.

Reduce DevOps workload and maintenance overhead.

2. Automatic Scaling

Serverless architectures scale seamlessly based on incoming requests. Whether you have 1 request or 1 million, AWS automatically adjusts capacity, within your account’s concurrency limits.

No manual intervention or auto scaling configuration.

Handles unpredictable traffic efficiently.

3. Cost Efficiency

You pay only when your code runs, billed in millisecond increments. There are no charges for idle capacity or over-provisioning.

Ideal for workloads with variable demand.

Cost model based on usage, not uptime.
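To make the usage-based cost model concrete, here is a small sketch of the arithmetic: a per-request charge plus a compute charge proportional to duration and memory. The function name and the rates passed in are illustrative assumptions, not quoted prices; actual rates vary by region and architecture, and any free tier is ignored here.

```python
def estimate_monthly_cost(requests, avg_duration_ms, memory_mb,
                          price_per_request, price_per_gb_second):
    """Estimate a usage-based bill: request charge plus compute charge."""
    # Compute usage is measured in GB-seconds: duration x allocated memory.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return requests * price_per_request + gb_seconds * price_per_gb_second


# Illustrative rates only (assumed); check the current AWS pricing page.
cost = estimate_monthly_cost(
    requests=1_000_000,
    avg_duration_ms=100,
    memory_mb=512,
    price_per_request=0.0000002,
    price_per_gb_second=0.0000166667,
)
print(f"Estimated monthly cost: ${cost:.2f}")
```

Note that zero requests yields a zero bill, which is the practical meaning of "usage, not uptime".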

4. Built-in Fault Tolerance

High availability and fault tolerance are automatically integrated. AWS manages redundancy and ensures continuous operation.

Resilient by design.

No need to architect complex failover mechanisms.

5. Faster Development Cycle

With serverless, developers focus on business logic rather than infrastructure setup.

Rapid prototyping and innovation.

Simplifies CI/CD pipelines.

Serverless is like hiring a taxi instead of owning a car:

Owning a car (Traditional servers): You pay for maintenance, parking, fuel, and insurance, even when it’s not being driven.

Hiring a taxi (Serverless): You pay only when you travel. No maintenance, no fuel worries, no idle costs.

AWS handles the engine, while you focus on reaching your destination — your application logic.

Key AWS Serverless Services

Compute: AWS Lambda

“Imagine a chef who appears only when you order food — cooks instantly and disappears.”

AWS Lambda is a serverless compute service that lets you run code without managing servers. You upload your function, define triggers (events), and AWS handles provisioning and scaling automatically.

Key Points:

Executes code in response to events (API calls, S3 uploads, DynamoDB updates, etc.).

Automatically scales based on request volume.

Pay only for execution time (in milliseconds).

Use Cases: Image processing, backend APIs, data transformations.

API Management: Amazon API Gateway

“Every restaurant needs a waiter who takes orders and delivers meals — API Gateway is that waiter for your apps.”

Amazon API Gateway acts as the front door for applications to access data, business logic, or functionality from backend services like Lambda or DynamoDB.

Key Points:

Fully managed service for creating, publishing, securing, and monitoring APIs.

Supports REST, HTTP, and WebSocket APIs.

Handles authentication, caching, rate limiting, and traffic management.

Use Cases: Building web/mobile app backends, connecting microservices.
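As a rough illustration of API Gateway acting as the front door to Lambda, the sketch below shows a handler that returns a response in the shape used by Lambda proxy integration (`statusCode`, `headers`, and a string `body`). The `name` query parameter and the greeting are hypothetical.

```python
import json


def handler(event, context):
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        # The body must be a string, so the payload is serialized to JSON.
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

With this shape, API Gateway translates the returned dictionary into the HTTP response sent back to the caller.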

Database: Amazon DynamoDB

“Imagine a smart pantry that restocks itself whenever supplies run low.”

DynamoDB is a fully managed NoSQL database service that provides fast, predictable performance and seamless scaling.

Key Points:

No servers or storage management.

Automatically scales throughput and storage.

Provides single-digit millisecond response time.

Offers on-demand and provisioned capacity modes.

Use Cases: User profiles, IoT data, gaming leaderboards, e-commerce catalogs.
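For the user-profile use case, a minimal sketch of writing and reading an item might look like the following. It assumes `table` is a boto3 DynamoDB `Table` resource, and that a hypothetical table named `UserProfiles` with partition key `user_id` already exists.

```python
def save_profile(table, user_id, name):
    """Write one profile item, keyed by the partition key user_id."""
    table.put_item(Item={"user_id": user_id, "name": name})


def load_profile(table, user_id):
    """Return the stored item, or None if no item has that key."""
    response = table.get_item(Key={"user_id": user_id})
    # The "Item" key is absent from the response when nothing matches.
    return response.get("Item")


# Usage with real AWS credentials (hypothetical table name):
#   import boto3
#   table = boto3.resource("dynamodb").Table("UserProfiles")
#   save_profile(table, "u-1", "Ada")
#   print(load_profile(table, "u-1"))
```

Passing the table in as a parameter keeps the functions easy to test with a stand-in object.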

Storage: Amazon S3 (Simple Storage Service)

“Think of an infinite warehouse where you can store anything — securely and reliably.”

S3 provides object-based storage for data of any size and type, designed for 99.999999999% (11 nines) of durability.

Key Points:

Store and retrieve unlimited data.

Integrates seamlessly with Lambda, CloudFront, and DynamoDB.

Can trigger Lambda functions upon object uploads.

Pay per storage used and data transferred.

Use Cases: Backup, static website hosting, log storage, media files.

Messaging: Amazon SNS and SQS

“One shouts to many, one queues tasks — that’s SNS and SQS.”

SNS (Simple Notification Service) and SQS (Simple Queue Service) enable asynchronous communication between components.

Key Points:

SNS: Pub/Sub model — sends a single message to multiple subscribers (email, Lambda, SMS, etc.).

SQS: Queue model — stores messages until they are processed by consumers.

Together, they decouple systems and ensure reliable message delivery.

Use Cases: Event-driven pipelines, background job processing, alerting systems.
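For background job processing, a Lambda function can consume messages from an SQS queue in batches. The sketch below reports only the failed messages back to SQS so that successfully processed ones are not retried. It assumes the event source mapping has `ReportBatchItemFailures` enabled; `process_message` and the `order_id` field are hypothetical.

```python
import json


def process_message(body):
    """Hypothetical business logic; raises on invalid input."""
    order = json.loads(body)
    if "order_id" not in order:
        raise ValueError("missing order_id")
    return order["order_id"]


def handler(event, context):
    failures = []
    for record in event.get("Records", []):
        try:
            process_message(record["body"])
        except Exception as exc:
            print(f"failed {record['messageId']}: {exc}")
            # Only these messages become visible on the queue again.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

An empty `batchItemFailures` list tells SQS that the whole batch succeeded.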

Workflow Orchestration: AWS Step Functions

“If Lambda functions are musicians, Step Functions is the conductor keeping everyone in harmony.”

Step Functions coordinates multiple AWS services into serverless workflows using visual state machines.

Key Points:

Define workflows with steps, decisions, and parallel branches.

Automates retries and error handling.

Integrates with Lambda, DynamoDB, ECS, and more.

Use Cases: Order processing, ETL pipelines, machine learning workflows.
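Workflows are described in the Amazon States Language, a JSON format. The sketch below builds a small, hypothetical order-processing definition as a Python dictionary, with one retried task, one decision, and a failure path. The state names and the placeholder ARNs (`REGION`, `ACCOUNT_ID`, function names) are assumptions, and `undefined_targets` is just a local sanity check, not part of any AWS SDK.

```python
import json

definition = {
    "Comment": "Hypothetical order-processing workflow",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:validate-order",
            # Retry transient failures with exponential backoff.
            "Retry": [{
                "ErrorEquals": ["States.TaskFailed"],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }],
            # If retries are exhausted, route to the failure state.
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "NotifyFailure"}],
            "Next": "IsPaid",
        },
        "IsPaid": {
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.paid", "BooleanEquals": True, "Next": "ShipOrder"}
            ],
            "Default": "NotifyFailure",
        },
        "ShipOrder": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:ship-order",
            "End": True,
        },
        "NotifyFailure": {
            "Type": "Fail",
            "Error": "OrderFailed",
            "Cause": "Validation failed or order unpaid",
        },
    },
}


def undefined_targets(defn):
    """Return transition targets that are not defined as states."""
    states = defn["States"]
    targets = {defn["StartAt"]}
    for state in states.values():
        targets.update(t for t in (state.get("Next"), state.get("Default")) if t)
        for rule in state.get("Choices", []) + state.get("Catch", []):
            targets.add(rule["Next"])
    return targets - set(states)


print(json.dumps(definition, indent=2))
```

The printed JSON is what would be supplied as the state machine definition once the placeholder ARNs are replaced with real ones.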

Event Routing: Amazon EventBridge

“Think of a smart air traffic controller routing events to the right destinations.”

EventBridge is a serverless event bus that connects AWS services, SaaS apps, and custom applications.

Key Points:

Routes events from sources to targets (Lambda, Step Functions, SNS, etc.).

Enables event-driven architectures.

Supports schema registry and filtering.

Use Cases: Application integration, real-time automation, monitoring workflows.
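Routing and filtering are driven by event patterns: JSON documents listing the values an event must contain for a rule to match. The sketch below shows a hypothetical pattern and a deliberately simplified local matcher that handles exact-value matching only. It is an illustration of the idea, not EventBridge's implementation, which supports further operators such as prefix and numeric matching. The `myapp.orders` source and `OrderPlaced` detail type are assumptions.

```python
def matches(pattern, event):
    """Simplified model: every pattern field must match the event exactly."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested event object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf pattern: a list of allowed values.
            return False
    return True


pattern = {
    "source": ["myapp.orders"],
    "detail-type": ["OrderPlaced"],
    "detail": {"status": ["paid", "shipped"]},
}

event = {
    "source": "myapp.orders",
    "detail-type": "OrderPlaced",
    "detail": {"order_id": "123", "status": "paid"},
}

print(matches(pattern, event))
```

Fields present in the event but absent from the pattern, such as `order_id` here, are ignored, so patterns only need to name the fields that matter for routing.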

Challenges and Limitations of Serverless

Serverless computing gives you speed and convenience, like a fast-food restaurant — quick service and no need for a kitchen. But what if you want a custom meal or complex dish? That’s where limitations appear.

Similarly, while serverless is fast, scalable, and cost-efficient, it’s not always the perfect fit for every workload.

Common Challenges

1. Cold Starts

When a Lambda function hasn’t been used recently, AWS needs to initialize a new instance before execution — this adds latency known as a cold start.

This can affect real-time or latency-sensitive applications.

Workaround: Use provisioned concurrency to keep functions pre-warmed.
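Before paying for provisioned concurrency, it can help to measure how often cold starts actually happen. Code at module level runs once per execution environment, while the handler runs on every invocation, so a module-level counter can flag the first call. This is a minimal sketch; the names `_ENV_STARTED_AT` and `_state` are hypothetical.

```python
import time

# Module-level code runs once, when a new execution environment starts.
_ENV_STARTED_AT = time.time()
_state = {"invocations": 0}


def handler(event, context):
    _state["invocations"] += 1
    return {
        # True only for the first invocation in this environment.
        "cold_start": _state["invocations"] == 1,
        "invocation": _state["invocations"],
        "environment_age_seconds": round(time.time() - _ENV_STARTED_AT, 3),
    }
```

The same property is why expensive setup, such as creating SDK clients, is usually placed at module level: warm invocations reuse it instead of repeating the work.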

2. Limited Execution Time

Lambda functions can run for a maximum of 15 minutes. This constraint makes serverless unsuitable for long-running processes such as big data ETL jobs or video rendering.

Workaround: Split long tasks into smaller functions or use AWS Batch / ECS for heavy workloads.
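One way to split a long task is to process items until the time budget runs low, then return a checkpoint so a later invocation can resume. The sketch below uses the Lambda context method `get_remaining_time_in_millis()`; the `items` and `next_index` event fields, the 10-second safety margin, and `process_item` are assumptions for illustration.

```python
# Assumed margin: stop early enough to return a checkpoint cleanly.
SAFETY_MARGIN_MS = 10_000


def process_item(item):
    """Hypothetical unit of work."""
    print(f"processed {item}")


def handler(event, context):
    items = event["items"]
    index = event.get("next_index", 0)
    while index < len(items):
        # Stop before the function would hit its timeout.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break
        process_item(items[index])
        index += 1
    # The caller re-invokes with this output until done is True.
    return {"items": items, "next_index": index, "done": index >= len(items)}
```

The returned dictionary can be fed back as the next invocation's input, for example by a Step Functions loop that repeats until `done` is true.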

3. Debugging and Monitoring Complexity

Since functions execute in ephemeral environments, traditional debugging tools and access to system logs are limited.

Workaround: Use Amazon CloudWatch Logs, AWS X-Ray, or structured logging for better observability.
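Structured logging here means emitting one JSON object per log line, so that logs can be filtered by field rather than by free-text search. The following is a minimal sketch; the `log_event` helper and its field names are hypothetical, while `aws_request_id` is an attribute of the Lambda context object.

```python
import json
import time


def log_event(level, message, context, **fields):
    """Print one JSON log line and return it."""
    entry = {
        "level": level,
        "message": message,
        # Ties the log line to a single invocation.
        "request_id": getattr(context, "aws_request_id", None),
        "timestamp": time.time(),
        **fields,
    }
    line = json.dumps(entry)
    print(line)  # standard output is captured in CloudWatch Logs
    return line


def handler(event, context):
    log_event("INFO", "order received", context, order_id=event.get("order_id"))
    return {"ok": True}
```

Including the request ID in every line makes it possible to reconstruct what happened during one invocation, even when many run concurrently.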

4. Vendor Lock-In

Serverless functions are deeply tied to the provider’s ecosystem (e.g., AWS-specific services, APIs, and SDKs). Migrating to another provider can be challenging.

Workaround: Use open standards or frameworks like Serverless Framework, Terraform, or Knative for portability.

5. Resource and Configuration Limits

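AWS imposes limits on memory (max 10 GB), deployment package size, and concurrent executions. Some limits, such as concurrent executions, can be raised through a quota increase request; others, such as maximum memory, are fixed and require redesigning the workload.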

Workaround: Optimize code and memory allocation; use asynchronous patterns.